How can you apply automation to accelerate release cycles and improve quality, safety, and governance?

Last Tuesday I participated in an online panel on the subject of CD Automation, as part of Continuous Discussions (#c9d9), a series of community panels about Agile, Continuous Delivery and DevOps. Watch a recording of the panel:

https://www.youtube.com/watch?v=2-_bb9Q-rtw

Continuous Discussions is a community initiative by Electric Cloud, which powers Continuous Delivery at businesses like SpaceX, Cisco, GE and E*TRADE by automating their build, test and deployment processes.

Below are a few insights from my contribution to the panel:

Automation != Orchestration

Automation is a really big opportunity. When people hear the word automation they think of many different things, but in the context of Continuous Delivery it is the process of taking your application and getting it deployed faster, with the right set of quality gates and the right people helping you automate that process seamlessly. In short, it's about deploying your application more frequently, with the right quality gates in place.

Fundamentally, the process looks like this: you build your application, then you test it to make sure the build is good. Then you deploy it to a set of environments (test, dev, staging, whatever your environments are), and you test it again. So it's a balance between how you build your application and how you test it.
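The build, test, deploy, test-again flow can be sketched as an ordered list of stages, each acting as a quality gate that stops the pipeline when it fails. This is a minimal illustration: the stage names and the `lambda` placeholders are hypothetical stand-ins for calls to your real build, deployment and test tools.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True when its quality gate passes.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failed quality gate.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, stage in stages:
        if not stage():
            print(f"Quality gate failed at stage: {name}")
            break
        completed.append(name)
    return completed


# Hypothetical stages; the lambdas simulate results (here the second test run fails).
stages: List[Stage] = [
    ("build", lambda: True),
    ("unit tests", lambda: True),
    ("deploy to test", lambda: True),
    ("tests against test environment", lambda: False),
    ("deploy to staging", lambda: True),
]

print(run_pipeline(stages))
```

Nothing reaches staging once a gate fails. That is the point of putting gates between the stages rather than only at the end.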

As a test architect, I'm obviously an advocate of quality, and I think there are three things people have to figure out when they start thinking about Continuous Delivery. First, velocity: how fast you deploy. Second, quality: which quality gates you need to put in place when you build and deploy an application. Third, once you have velocity and quality, the cost of doing things: which tool sets you need to make all of this work together.

We have many teams here, with many moving parts and many people. But when it comes to orchestration, the question is: what in our current process prevents us from deploying, and which manual steps can we eliminate? It comes down to how long it takes us to build and deploy an application today and which manual processes are involved. Then dissect each one of them and make it easier. Let's be honest: if it takes a long time to build your application, that will be a big bottleneck for your Continuous Delivery pipeline.
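The inventory described above can start as a simple table of release steps. Record how long each one takes and whether it is done by hand, then rank the manual steps to see which to automate first. This is a sketch: the step names and timings are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    name: str
    minutes: float
    manual: bool


def automation_candidates(steps: List[Step]) -> List[Step]:
    """Return the manual steps, longest first, as candidates to automate."""
    return sorted((s for s in steps if s.manual), key=lambda s: s.minutes, reverse=True)


def total_minutes(steps: List[Step]) -> float:
    """Total time for one build-and-deploy cycle."""
    return sum(s.minutes for s in steps)


# Hypothetical timings for one release; substitute your own measurements.
steps = [
    Step("compile", 6, manual=False),
    Step("copy build output to server", 20, manual=True),
    Step("smoke test", 15, manual=True),
    Step("update config files", 10, manual=True),
]

print(f"Total: {total_minutes(steps)} minutes")
for s in automation_candidates(steps):
    print(f"Automate next: {s.name} ({s.minutes} min)")
```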

On top of that, being in the airline industry, another thing we have to consider in the CD process is having the right set of guidelines, or standards, behind it. Say your application takes minutes to build (hopefully not an hour, just a couple of minutes). Are there existing tool sets or technologies we can use to make that process much faster and avoid the manual effort? I'll give you a perfect example:

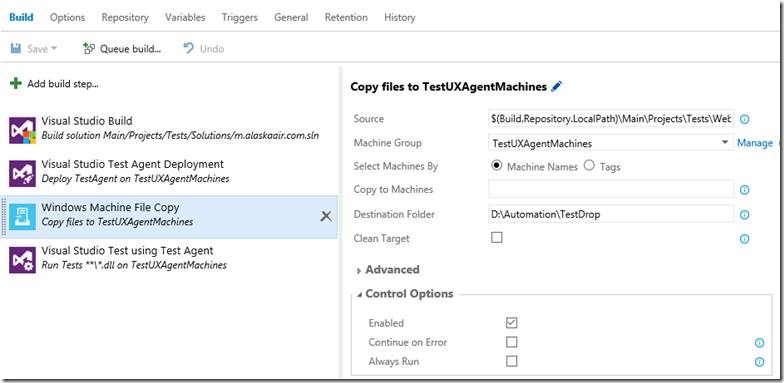

Before, it was pretty typical for us to use scripts to deploy an application: some PowerShell scripts and some batch files, "xcopy this, copy that…" Today you really don't have to do that. There is software that builds your application, takes those bits and deploys them automatically; you just need to use the right task. Those are the small things you need to start thinking about in terms of orchestration. Then, on top of that, you ask: what do we need to make sure this application has the right set of quality gates behind it when we deploy it?

So looking at those different processes (orchestration, flow) helps a lot. I would strongly suggest looking at what your application does and how long it takes to build. If there's any way you can reduce the build time, or any step you still do manually, automate it. There are many tools out there that can help you do it (too many…).

We do the same thing. You have the same orchestration processes; the only thing you have to think about differently is this: don't create a process that depends on a particular environment. When you deploy your application, the process should be able to target any environment. You can use the same process on a test environment or a QA environment, but you shouldn't develop a process that differs from one environment to the next. Your orchestration should target any environment. That way anyone can take the same process, spin up a new environment, and run through the same steps again, with the same quality gates in between.
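One way to keep a single process for every environment is to make the environment pure data and pass it into one deploy routine that always runs the same quality gates. This is a sketch under assumptions: the `Environment` fields, the example.com URLs, the gate names and the version string are all hypothetical, and the function only describes the steps rather than performing them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Environment:
    name: str
    base_url: str
    instance_count: int


# Hypothetical environment settings: only this data differs between environments.
ENVIRONMENTS: Dict[str, Environment] = {
    "dev": Environment("dev", "https://dev.example.com", 1),
    "qa": Environment("qa", "https://qa.example.com", 2),
    "staging": Environment("staging", "https://staging.example.com", 2),
}

# The same quality gates run no matter which environment is targeted.
QUALITY_GATES = ["smoke tests", "regression tests"]


def deploy(app_version: str, env: Environment) -> List[str]:
    """Describe the identical steps used to deploy to any environment."""
    steps = [f"deploy {app_version} to {env.base_url} on {env.instance_count} instance(s)"]
    steps += [f"run {gate} against {env.base_url}" for gate in QUALITY_GATES]
    return steps


for env in ENVIRONMENTS.values():
    print(deploy("1.4.2", env))
```

Spinning up a new environment then means adding one entry to `ENVIRONMENTS`. The process and its gates stay untouched.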

Also, when it comes to testing, there's the traditional way of testing applications. You do a lot of the work manually, and sometimes you have a hundred, two hundred, three hundred test cases. When you adopt CD processes, you have to think smartly. For example, which tests make sense for deploying this build, and do they cover the right set of test families? A good way to decide is this: if you have analytics in your organization, look at which scenarios your customers use most.

Sometimes you create test cases and automate them just for the sake of automating. But what value do you get out of those automated test cases if the scenarios they cover are barely used? So optimize your tests. Look at the data you have for your app, dissect it, think about ways to make your tests smarter, and make that part of your process.
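Usage-driven test selection can be sketched as a greedy pick. Each round chooses the test that adds the most not-yet-covered customer usage, until a test budget runs out or nothing left adds value. This is one possible technique, not the author's stated method. The scenario names, usage counts and test names are hypothetical, and `usage` stands in for whatever your analytics reports.

```python
from typing import Dict, List, Set


def _new_usage(scenarios: List[str], covered: Set[str], usage: Dict[str, int]) -> int:
    """Customer usage a test would add beyond what is already covered."""
    return sum(usage.get(s, 0) for s in set(scenarios) - covered)


def select_tests(tests: Dict[str, List[str]], usage: Dict[str, int], budget: int) -> List[str]:
    """Greedily pick up to `budget` tests that cover the most-used scenarios.

    tests: test name -> scenarios it exercises
    usage: scenario -> how often customers use it
    """
    covered: Set[str] = set()
    selected: List[str] = []
    remaining = dict(tests)
    while remaining and len(selected) < budget:
        best = max(remaining, key=lambda name: _new_usage(remaining[name], covered, usage))
        if _new_usage(remaining[best], covered, usage) == 0:
            break  # the remaining tests only cover scenarios already covered or unused
        selected.append(best)
        covered.update(remaining.pop(best))
    return selected


# Hypothetical analytics and test inventory.
usage = {"search flights": 9000, "book flight": 4000, "check in": 2500,
         "change seat": 300, "print receipt": 20}
tests = {
    "test_search": ["search flights"],
    "test_booking_flow": ["search flights", "book flight"],
    "test_check_in": ["check in"],
    "test_seat_change": ["change seat"],
    "test_receipt": ["print receipt"],
}

print(select_tests(tests, usage, budget=3))
```

`test_search` is never chosen because `test_booking_flow` already covers its scenario. This is the "testing for the sake of automating" case the paragraph warns about.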

Challenges and Checkpoints of CD Pipelines

At as.com, our main website (a great group of people, by the way), the way we approach software starts with a question: what is the primary reason we're doing CD? It's because we're doing agile development, taking one step at a time. Based on our experience, and by continuing to observe and evaluate, we've built a process that makes it easier for people to integrate with each other.

Take tools, for example: pick a tool that people can use easily and that works well for the development, testing and PM teams. But before you even talk about tools, I want to go one step back, because one of the challenges is understanding the culture. I think that is the big thing: understanding the culture change and what people need to do, and embracing it, because change is inevitable.

I'll give you a good example: we're all used to doing waterfall. Back in the old days, you handed work off to someone, who handed it off to someone else… Now, when you start something, everyone is engaged from that point on. With this set of tools, there are things you have to sacrifice along the way that you're not accustomed to. In testing, for example, you probably still see this from time to time: some test teams still create test plans and put them through reviews by their superiors. When you try to do CD, you try to cut some of that time away. For web automation, tackle it at the right point and try to do less of it. There is a great article out there about the testing pyramid. The base of the pyramid is unit test coverage, the middle layer is service tests, and the top layer is UX automation. Put less emphasis on UX automation and test more thoroughly at the unit level; that way you get more coverage.
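A quick way to see whether a suite follows the testing pyramid is to count tests per layer. Then check that each layer is larger than the one above it. This is a sketch: the layer keys and the counts are hypothetical, and "strictly larger" is only one simple way to express the pyramid shape.

```python
from typing import Dict

# Layers from the base of the pyramid to the top.
LAYERS = ["unit", "service", "ux"]


def is_pyramid(counts: Dict[str, int]) -> bool:
    """True if each layer has strictly more tests than the layer above it."""
    sizes = [counts.get(layer, 0) for layer in LAYERS]
    return all(lower > upper for lower, upper in zip(sizes, sizes[1:]))


def layer_shares(counts: Dict[str, int]) -> Dict[str, float]:
    """Fraction of all tests that sit in each layer."""
    total = sum(counts.get(layer, 0) for layer in LAYERS)
    if total == 0:
        return {layer: 0.0 for layer in LAYERS}
    return {layer: counts.get(layer, 0) / total for layer in LAYERS}


# Hypothetical suites: one shaped like a pyramid, one inverted (heavy on UX automation).
healthy = {"unit": 700, "service": 200, "ux": 100}
inverted = {"unit": 50, "service": 80, "ux": 300}

print(is_pyramid(healthy), layer_shares(healthy))
print(is_pyramid(inverted), layer_shares(inverted))
```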

So my point is this. Some teams use one set of tools for testing while dev and PM use a different set for managing work. When the time comes to integrate and take this agile CD approach, no one has time to learn those tools. A developer won't have time to understand which tool the tester is using to automate things, and the same goes for PM and other folks, DevOps, and infrastructure. So choose a tool that will work well for the whole team. That is one of the things that made it challenging for us, and we're still evaluating some of these tools. There are many tools, and since we live in a world with a lot of open source, you can certainly take advantage of that and look at what people are using out there.

Beyond tools, culture and process, there's one more thing. When you're doing Continuous Delivery, it's easy to think only about moving fast: velocity, orchestration. But think about the small things that really help improve your app, such as security and performance testing. So my suggestion for one of the key challenges is to make sure you have a good monitoring system in place when you start your CD process. Keep monitoring what your application is doing, even when you only deploy it to a test environment. I guarantee there are certain things that only get exposed once you have the right monitoring system in place.
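Monitoring a test deployment can begin with something as small as comparing a few post-deploy metrics against agreed thresholds. This sketch assumes you can collect request latencies and error counts from your monitoring system. The 500 ms p95 and 1% error-rate thresholds are hypothetical defaults, and the percentile uses the nearest-rank method.

```python
import math
from typing import Dict, List


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_health(latencies_ms: List[float], errors: int, requests: int,
                 max_p95_ms: float = 500.0, max_error_rate: float = 0.01) -> Dict[str, bool]:
    """Compare post-deploy metrics with thresholds; any False means investigate."""
    if requests <= 0:
        raise ValueError("need at least one request to compute an error rate")
    return {
        "latency_ok": percentile(latencies_ms, 95) <= max_p95_ms,
        "errors_ok": errors / requests <= max_error_rate,
    }


# Hypothetical samples from a test environment: one slow outlier request.
latencies = [120, 130, 140, 150, 160, 170, 180, 190, 200, 900]
print(check_health(latencies, errors=3, requests=1000))
```

The average latency here looks fine. The p95 check still flags the slow outlier, which is the kind of issue that tends to surface only once monitoring is in place.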

Think of it like a startup company going through the crawl, walk, run phases. The first phase is like this: "Let's do it!" Let's get the right tool sets, let's get it working, let's go with it. Yes, you're delivering faster, and you're learning the caveats, hitting points where you say, "Oh, we need to go back…" But the issue is that as you move forward, you start seeing more deficiencies, and a lot of people aren't standardizing things. People do things differently. In fact, since we're talking about Continuous Delivery, I should say people deliver software differently from one team to the next. It becomes very challenging to standardize, not only the tool sets but the process as well.

One person may run the flow differently, so you end up with a different set of quality gates and then a different set of tools. We're still learning, but at the end of the day, let's be honest, you will not satisfy everybody. You're not going to satisfy every team out there, but at least try to reach consensus on small, standard things that work well for everybody.

We still have teams that use different tool sets, but it's no longer the case that every team is different. Now we have one big organization using the same tools, and that's a good start. Another organization uses the same tools, which is a good start too, and so does another. Now a lot of people are talking with each other, so you can take it to the next level. Standardizing also brings a big benefit in cost: you save the company money by standardizing the things that work well for you. So you're killing two birds with one stone. On top of that, people become more enticed to collaborate, share best practices, and provide governance around the things that work well for us.

Quality is my forte; I could talk about web automation and unit testing all day. But people need to realize that the tools you use to automate tests may not work for everyone. At least try to establish a standard that works well for governing the process. The other thing you have to consider is making tracking and auditing easier.
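Tracking and auditing get easier if every pipeline run leaves a structured record. Each record captures who triggered the run, what was deployed and where, and which quality gates passed. This is a sketch assuming an append-only JSON Lines log. The `PipelineRun` fields, the app name, the versions and the user names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class PipelineRun:
    """One record per deployment, kept for tracking and auditing."""
    app: str
    version: str
    environment: str
    triggered_by: str
    gates: Dict[str, bool]
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    @property
    def passed(self) -> bool:
        # A run with no recorded gates is treated as failed, so skipped gates stand out.
        return bool(self.gates) and all(self.gates.values())


def to_audit_line(run: PipelineRun) -> str:
    """Serialize a run as one JSON line for an append-only audit log."""
    record = asdict(run)
    record["passed"] = run.passed
    return json.dumps(record, sort_keys=True)


def failed_runs(lines: List[str]) -> List[dict]:
    """Read audit lines back and return the runs where any gate failed."""
    return [record for record in map(json.loads, lines) if not record["passed"]]


runs = [
    PipelineRun("booking-web", "2.3.0", "qa", "alice", {"unit": True, "security scan": True}),
    PipelineRun("booking-web", "2.3.1", "qa", "bob", {"unit": True, "security scan": False}),
]
log = [to_audit_line(r) for r in runs]
print(failed_runs(log))
```

Because every team writes the same record shape, an auditor can answer "what went to QA, who triggered it, and which gates failed" from one log.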

Tools: Tips and Tricks

I'll make this straight. If I had one tip about Continuous Delivery, it's this: we know we want to be Agile and release faster with quality, so make everybody accountable for quality. If you think about quality from the get-go when you start doing Continuous Delivery, you'll be able to work out your tool sets, define a process, and define your orchestration. In fact, treating quality as a mandatory part of your process from the start will even help determine which tools you need.

I can't stress this enough. When people talk about Continuous Delivery, it's all about release, release, release… features, features, features… If that's all you focus on, in the end you'll spend more time fixing defects and bugs.

Even tools sometimes don't have the right set of features to help you deal with quality. So keep everybody accountable for quality, because this is very important when you think about Continuous Delivery all the way out to your production servers. When you start thinking about quality, you'll be able to write down the list of things you need: monitoring, automation, tool sets, orchestration, security, performance. If I had one big tip, it's to make everybody accountable for quality. It's a practice shared by everybody, not just the test team.