One great feature that I like in NUnit is the ability to use collection types for data-driven tests. You don’t have to open a connection to an external data source, pull data, and use it to drive the parameters of your tests. With a simple attribute in NUnit, you can drive tests as indicated here:

TestCaseSourceAttribute

http://www.nunit.org/index.php?p=testCaseSource&r=2.5.3

NUnit’s implementation lets you enumerate a collection to data-drive your tests. MSTest offers similar extensibility, which is outlined in the following blog:

Extending the Visual Studio Unit Test Type

http://blogs.msdn.com/b/vstsqualitytools/archive/2009/09/04/extending-the-visual-studio-unit-test-type-part-1.aspx

Following this blog, I was able to step through how MSTest invokes a test method and passes objects to it. When MSTest executes, the flow goes through:

- TestClassExtensionAttribute calls : GetExecution()

- TestExtensionExecution calls : CreateTestMethodInvoker(TestMethodInvokerContext context)

- ITestMethodInvoker calls : Invoke(params object[] parameters)

Throughout this process, you can use custom attributes and their properties to pass in test data. It’s best to read the custom attributes in ITestMethodInvoker.Invoke().

In my solution, I want a fast way of supplying an IEnumerable<object> as my test data. In this case, I’ll use a custom attribute to provide a class name and a method name, and return the test data through reflection. I’ll then use that in ITestMethodInvoker.Invoke() to enumerate objects for my tests.

- ClassName: class holding the method to generate test data

- DataSourceName: method within the class that generates any test data
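
The lookup described above can be sketched with plain reflection, independent of MSTest. This is only a minimal sketch, assuming the data method is public, static, and parameterless. `DataSourceSketchAttribute`, `SampleData`, `SampleTests`, and `DataSourceResolver` are hypothetical names used for illustration; they are not part of the extension built in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical stand-in for the custom attribute defined later in this post.
[AttributeUsage(AttributeTargets.Method, Inherited = false, AllowMultiple = false)]
public sealed class DataSourceSketchAttribute : Attribute
{
    public string ClassName { get; }
    public string DataSourceName { get; }

    public DataSourceSketchAttribute(string className, string dataSourceName)
    {
        ClassName = className;
        DataSourceName = dataSourceName;
    }
}

// Hypothetical data provider: a public static, parameterless method.
public static class SampleData
{
    public static IEnumerable<object> ThreeNames()
    {
        return new object[] { "Ann", "Bob", "Cy" };
    }
}

// Hypothetical test class whose method points at the data provider.
public class SampleTests
{
    [DataSourceSketch("SampleData", "ThreeNames")]
    public void TakesOneName(string name) { }
}

public static class DataSourceResolver
{
    // Reads the attribute from the test method, then resolves and invokes
    // the named static method in the same assembly as the test method.
    public static IEnumerable<object> Resolve(MethodInfo testMethod)
    {
        var attribute = testMethod
            .GetCustomAttributes(typeof(DataSourceSketchAttribute), false)
            .Cast<DataSourceSketchAttribute>()
            .FirstOrDefault();
        if (attribute == null)
            throw new InvalidOperationException("No data source attribute on " + testMethod.Name);

        // throwOnError = true: fail loudly if the class name is wrong.
        Type type = testMethod.DeclaringType.Assembly.GetType(attribute.ClassName, true);
        MethodInfo source = type.GetMethod(attribute.DataSourceName,
            BindingFlags.Public | BindingFlags.Static);
        if (source == null)
            throw new MissingMethodException(attribute.ClassName, attribute.DataSourceName);

        // null target: the method is static; null arguments: it takes none.
        var data = source.Invoke(null, null) as IEnumerable<object>;
        if (data == null)
            throw new InvalidCastException("The data source must return IEnumerable<object>.");
        return data;
    }
}
```

Calling `DataSourceResolver.Resolve(typeof(SampleTests).GetMethod("TakesOneName"))` returns the three names, which a caller could then feed to the test method one at a time.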

Project Setup:

Make sure that you have the following references in your project:

- Microsoft.VisualStudio.QualityTools.Common.dll

- Microsoft.VisualStudio.QualityTools.UnitTestFramework.dll

- Microsoft.VisualStudio.QualityTools.Vsip.dll

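These assemblies ship with Visual Studio rather than with the .NET Framework. Browse to them from the references section of your project; they are typically found under the Visual Studio installation directory (for example, Common7\IDE\PublicAssemblies and Common7\IDE\PrivateAssemblies).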

The Custom Attribute:

[global::System.AttributeUsage(AttributeTargets.Method, Inherited = false, AllowMultiple = false)]

public class EnumurableDataSourceAttribute : Attribute

{

public string DataSourceName { get; set; }

public string ClassName { get; set; }

public EnumurableDataSourceAttribute(string className, string dataSourceName)

{

this.DataSourceName = dataSourceName;

this.ClassName = className;

}

}

Test Class Implementation:

[Serializable]

public class TestClassCollectionAttribute : TestClassExtensionAttribute

{

public override Uri ExtensionId => new Uri("urn:TestClassAttribute");

public override object GetClientSide()

{

return base.GetClientSide();

}

public override TestExtensionExecution GetExecution()

{

return new TestExtension();

}

}

Test Extension:

public class TestExtension : TestExtensionExecution

{

public override void Initialize(TestExecution execution)

{

}

public override ITestMethodInvoker CreateTestMethodInvoker(TestMethodInvokerContext context)

{

return new TestInvokerMethodCollection(context);

}

public override void Dispose()

{

}

}

Test Method Invoker:

public class TestInvokerMethodCollection : ITestMethodInvoker

{

private readonly TestMethodInvokerContext _context;

public TestInvokerMethodCollection(TestMethodInvokerContext context)

{

Debug.Assert(context != null);

_context = context;

}

public TestMethodInvokerResult Invoke(params object[] parameters)

{

Trace.WriteLine($"Begin Invoke:Test Method Name: {_context.TestMethodInfo.Name}");

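Assembly testMethodAssembly = _context.TestMethodInfo.DeclaringType.Assembly;

// Read the data source attribute; this invoker requires one on the test method.

object[] datasourceattributes = _context.TestMethodInfo.GetCustomAttributes(typeof (EnumurableDataSourceAttribute), false);

if (datasourceattributes.Length == 0) throw new InvalidOperationException($"{_context.TestMethodInfo.Name} has no EnumurableDataSourceAttribute.");

var datasource = (EnumurableDataSourceAttribute) datasourceattributes[0];

// Resolve the class and the data-generating method by name from the test assembly.

Type getclasstype = testMethodAssembly.GetType(datasource.ClassName, true);

MethodInfo getmethodforobjects = getclasstype.GetMethod(datasource.DataSourceName);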

/*

Use the line below if parameters need to be passed to the method.

ParameterInfo[] methodparameters = getmethodforobjects.GetParameters();

To call an instance method, instantiate the concrete class first:

object classInstance = Activator.CreateInstance(getclasstype, null);

and pass the instance as the first argument of Invoke():

IEnumerable<object> enumerableobjects = getmethodforobjects.Invoke(classInstance, null) as IEnumerable<object>;

*/

// Invoke(null, null): the first argument is the target instance (null for a static method); the second is the argument list (null for none).

IEnumerable<object> enumerableobjects = getmethodforobjects.Invoke(null, null) as IEnumerable<object>;

if (enumerableobjects == null) throw new InvalidOperationException($"{getmethodforobjects.Name} must return IEnumerable<object>.");

var testresults = new TestResults();

// Each object is passed to the test method in turn, and its result is recorded.

foreach (var obj in enumerableobjects)

{

testresults.AddTestResult(_context.InnerInvoker.Invoke(obj), new object[1] { obj });

}

var output = testresults.GetAllResults();

_context.TestContext.WriteLine(output.ExtensionResult.ToString());

return output;

}

}
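
The `TestResults` helper used in `Invoke()` is not shown in this post. The sketch below illustrates one way such an aggregator could work; it is an assumption, not the original implementation. `InvokeResult` is a hypothetical stand-in for MSTest’s `TestMethodInvokerResult`, and `ResultAggregator` is a hypothetical name, so that the example compiles without the Visual Studio assemblies.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical stand-in for MSTest's TestMethodInvokerResult.
public class InvokeResult
{
    public Exception Exception { get; set; }
    public object ExtensionResult { get; set; }
}

// Hypothetical aggregator: collects one result per data item and folds
// them into a single result for the whole test method.
public class ResultAggregator
{
    private readonly List<KeyValuePair<InvokeResult, object[]>> _rows =
        new List<KeyValuePair<InvokeResult, object[]>>();

    public void AddTestResult(InvokeResult result, object[] parameters)
    {
        _rows.Add(new KeyValuePair<InvokeResult, object[]>(result, parameters));
    }

    public InvokeResult GetAllResults()
    {
        var summary = new StringBuilder();
        var failures = new List<Exception>();

        foreach (var row in _rows)
        {
            bool passed = row.Key.Exception == null;
            if (!passed) failures.Add(row.Key.Exception);

            // One summary line per data item, e.g. "Passed: 1".
            summary.AppendLine(string.Format("{0}: {1}",
                passed ? "Passed" : "Failed",
                string.Join(", ", row.Value)));
        }

        return new InvokeResult
        {
            // Any failing item fails the whole test method.
            Exception = failures.Count == 0 ? null : new AggregateException(failures),
            ExtensionResult = summary.ToString()
        };
    }
}
```

The design choice here is that a single failing data item marks the whole test method as failed, while the summary text still records the outcome of every item.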

Test Project Setup:

Once you’ve successfully built your assembly project (the custom TestClass attribute), you need to register the custom extension class on your local machine. This is a custom test assembly/adapter, so we’ll need to:

- Make changes to the registry

- Add the compiled assembly to the install directory for your VS version. In my case, I’m using Visual Studio 2015, so the custom assembly is copied to: C:\Program Files (x86)\Microsoft Visual Studio 14.0\Common7\IDE\PrivateAssemblies

Luckily, there’s a batch script that performs all of these steps. The only things you need to do are:

- Change the version of VS to your working VS edition

- Change the assembly namespace and class reference

The deployment script: (You can also download the deployment script from this blog. Scroll to the bottom of the blog post.)

http://blogs.msdn.com/b/qingsongyao/archive/2012/03/28/examples-of-mstest-extension.aspx?CommentPosted=true

@echo off

::------------------------------------------------

:: Install a MSTest unit test type extension

:: which defines a new test class attribute

:: and how to execute its test methods and

:: interpret results.

::

:: NOTE: Only VS needs this and the registration done; the xcopyable mstest uses

:: TestTools.xml virtualized registry file updated which we already have done in sd

::------------------------------------------------

setlocal

:: All the files we need to copy or register are relative to this script folder

set extdir=%~dp0

:: Get 32 or 64-bit OS

set win64=0

if not "%ProgramFiles(x86)%" == "" set win64=1

if %win64% == 1 (

set vs14Key=HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\VisualStudio\14.0

) else (

set vs14Key=HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\14.0

)

:: Get the VS installation path from the Registry

for /f "tokens=2*" %%i in ('reg.exe query %vs14Key% /v InstallDir') do set vsinstalldir=%%j

:: Display some info

echo.

echo =-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-==-=-=-=-=-=-=-=-=-=-

echo Please ensure that you are running with administrator privileges

echo to copy into the Visual Studio installation folder and add keys to the Registry.

echo Any access denied messages probably mean you are not.

echo =-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-=-==-=-=-=-=-=-=-=-=-=-

echo.

echo 64-bit OS: %win64%

echo Visual Studio 14.0 regkey: %vs14Key%

echo Visual Studio 14.0 IDE dir: %vsinstalldir%

::

:: Copy the test type extension assembly to the VS private assemblies folder

::

set extdll=AAG.Test.Core.CustomTestExtenstions.dll

set vsprivate=%vsinstalldir%PrivateAssemblies

echo Copying to VS PrivateAssemblies: %vsprivate%\%extdll%

:: Quote the source path too, in case the script folder contains spaces

copy /Y "%extdir%%extdll%" "%vsprivate%\%extdll%"

::

:: Register the extension with mstest as a known test type

:: (SSM has two currently, both are in the same assembly)

::

echo Registering the unit test types extensions for use in VS' MSTest

:: Keys Only for 64-bit

if %win64% == 1 (

set vs14ExtKey64=HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\VisualStudio\14.0\EnterpriseTools\QualityTools\TestTypes\{13cdc9d9-ddb5-4fa4-a97d-d965ccfc6d4b}\TestTypeExtensions

set vs14_configExtKey64=HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\VisualStudio\14.0_Config\EnterpriseTools\QualityTools\TestTypes\{13cdc9d9-ddb5-4fa4-a97d-d965ccfc6d4b}\TestTypeExtensions

)

:: Keys for both 32 and 64-bit

set vs14ExtKey=HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\14.0\EnterpriseTools\QualityTools\TestTypes\{13cdc9d9-ddb5-4fa4-a97d-d965ccfc6d4b}\TestTypeExtensions

set vs14_configExtKey=HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\VisualStudio\14.0_Config\EnterpriseTools\QualityTools\TestTypes\{13cdc9d9-ddb5-4fa4-a97d-d965ccfc6d4b}\TestTypeExtensions

:: Register the TestClassCollectionAttribute

set regAttrName=TestClassCollectionAttribute

set regProvider="AAG.Test.Core.CustomTestExtenstions.TestClassCollectionAttribute, AAG.Test.Core.CustomTestExtenstions"

if %win64% == 1 (

reg add %vs14ExtKey64%\%regAttrName% /f /v AttributeProvider /d %regProvider%

reg add %vs14_ConfigExtKey64%\%regAttrName% /f /v AttributeProvider /d %regProvider%

)

reg add %vs14ExtKey%\%regAttrName% /f /v AttributeProvider /d %regProvider%

reg add %vs14_ConfigExtKey%\%regAttrName% /f /v AttributeProvider /d %regProvider%

:eof

endlocal

exit /b %errorlevel%

Creating the tests in VS:

In your test project, add a reference to the custom MSTest assembly.

In your tests, make sure to use the custom test class and enumerator attributes that we previously defined. Here’s a sample of test methods that use different IEnumerable objects.

[TestClassCollection]

public class MethodCollectionTests

{

public TestContext TestContext { get; set; }

[TestInitialize()]

public void TestInit()

{

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get5Employees")]

public void Verify5Employees(Employee employee)

{

Assert.IsFalse(String.IsNullOrEmpty(employee.Displayname));

Console.WriteLine($"Employee FirstName: {employee.Displayname}");

TestContext.WriteLine($"Test Case Passed for {TestContext.TestName} with Data: {employee.Displayname}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get20Employees")]

public void Verify20Employees(Employee employee)

{

Assert.IsFalse(String.IsNullOrEmpty(employee.Displayname));

Console.WriteLine($"Employee FirstName: {employee.Displayname}");

TestContext.WriteLine($"Test Case Passed for {TestContext.TestName} with Data: {employee.Displayname}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get5Cars")]

public void Verify5Cars(Car car)

{

Assert.IsNotNull(car);

Assert.IsFalse(String.IsNullOrEmpty(car.Description));

Console.WriteLine($"Car Info: Type: {car.CarType} Cost: {car.Cost.ToString("C")}");

TestContext.WriteLine($"Test Case Passed for {TestContext.TestName} with Data: {car.CarType} with Id: {car.Id}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get13Cars")]

public void Verify13Cars(Car car)

{

Assert.IsNotNull(car);

Assert.IsFalse(String.IsNullOrEmpty(car.Description));

Console.WriteLine($"Car Info: Type: {car.CarType} Cost: {car.Cost.ToString("C")}");

TestContext.WriteLine($"Test Case Passed for {TestContext.TestName} with Data: {car.CarType} with Id: {car.Id}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get5EmployeesWithCars")]

public void Verify5EmployeesWithCars(EmployeeWithCar employeeWithCar)

{

Assert.IsNotNull(employeeWithCar);

Assert.IsFalse(String.IsNullOrEmpty(employeeWithCar.Id));

Assert.IsNotNull(employeeWithCar.Car);

Assert.IsNotNull(employeeWithCar.Employee);

TestContext.WriteLine($"Test Case Passed for {TestContext.TestName} with Data: Name: {employeeWithCar.Employee.Displayname} Car: {employeeWithCar.Car.CarType} with Id: {employeeWithCar.Employee.Id}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get10SequentialInts")]

public void Verify10SequentialInts(int intcurrent)

{

Assert.IsInstanceOfType(intcurrent, typeof(int));

TestContext.WriteLine($"Current int Value: {intcurrent}");

}

[TestMethod]

[EnumurableDataSourceAttribute("CustomTestExtenstions.Tests.Helper.Helper", "Get5StringObjects")]

public void VerifyGet5StringObjects(string stringcurrent)

{

Assert.IsInstanceOfType(stringcurrent, typeof(string));

TestContext.WriteLine($"Current string Value: {stringcurrent}");

}

}

The Helper class defines the helper methods to generate test data:

public static class Helper

{

public static IEnumerable<object> Get5Employees()

{

var employees = GenerateData.GetEmployees(5);

return (IEnumerable<object>) employees;

}

public static IEnumerable<object> Get20Employees()

{

var employees = GenerateData.GetEmployees(20);

return (IEnumerable<object>) employees;

}

public static IEnumerable<object> Get5Cars()

{

var cars = GenerateData.GetCars(5);

return (IEnumerable<object>) cars;

}

public static IEnumerable<object> Get13Cars()

{

var cars = GenerateData.GetCars(13);

return (IEnumerable<object>)cars;

}

public static IEnumerable<object> Get5EmployeesWithCars()

{

var cars = GenerateData.GetCars(5);

var employees = GenerateData.GetEmployees(5);

var employeeswithcars = new List<EmployeeWithCar>();

for (int i = 0; i < cars.Count; i++)

{

var employeewithcar = new EmployeeWithCar

{

Car = cars[i],

Employee = employees[i]

};

employeeswithcars.Add(employeewithcar);

}

return (IEnumerable<object>)employeeswithcars;

}

public static IEnumerable<object> Get10SequentialInts()

{

var intobjects = new int[] { 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 };

// int is a value type, so each element must be boxed with Cast<object>(); no further cast is needed.

return intobjects.Cast<object>();

}

public static IEnumerable<object> Get5StringObjects()

{

var stringobjects = new string[] { "String1", "String2", "String3", "String4", "String5" };

return stringobjects.Cast<object>();

}

}
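
The `Employee`, `Car`, `EmployeeWithCar`, and `GenerateData` types used by the Helper class are not shown in this post. The sketch below is one possible shape for them, inferred only from the members the tests and helpers touch; the property types, the generated values, and the way `EmployeeWithCar.Id` is populated are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical models: only the members used by the tests in this post.
public class Employee
{
    public string Id { get; set; }
    public string Displayname { get; set; }
}

public class Car
{
    public string Id { get; set; }
    public string CarType { get; set; }
    public string Description { get; set; }
    public decimal Cost { get; set; }
}

public class EmployeeWithCar
{
    // Assumption: the Id is generated on construction, since the helper
    // never sets it explicitly but the test asserts it is not empty.
    public EmployeeWithCar()
    {
        Id = Guid.NewGuid().ToString();
    }

    public string Id { get; set; }
    public Employee Employee { get; set; }
    public Car Car { get; set; }
}

// Hypothetical generator returning List<T>, which matches the helper's
// use of cars.Count and index access.
public static class GenerateData
{
    public static List<Employee> GetEmployees(int count)
    {
        return Enumerable.Range(1, count)
            .Select(i => new Employee { Id = "E" + i, Displayname = "Employee " + i })
            .ToList();
    }

    public static List<Car> GetCars(int count)
    {
        return Enumerable.Range(1, count)
            .Select(i => new Car
            {
                Id = "C" + i,
                CarType = "Type " + i,
                Description = "Car " + i,
                Cost = 10000m + i
            })
            .ToList();
    }
}
```

Because `Employee` and `Car` are reference types, a `List<Employee>` converts to `IEnumerable<object>` through generic covariance (.NET 4.0 and later), which is why the helper’s direct casts succeed. Arrays of value types such as `int[]` do not convert that way and need `Cast<object>()`.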

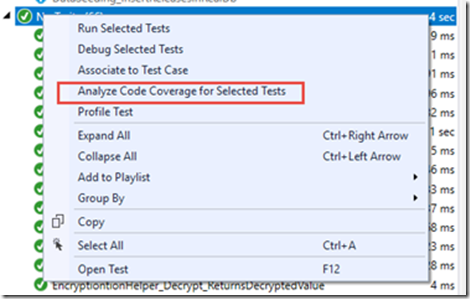

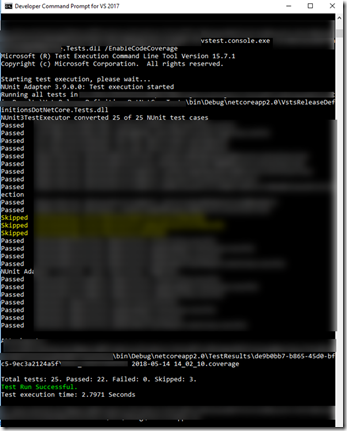

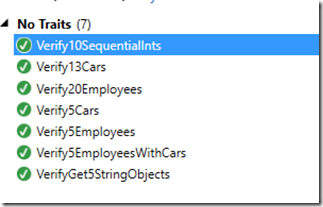

The execution results!

And the output for each result!