When you work with EF Core, the initial project creation requires that you select a .NET Core project. That is fine if you are working entirely in a .NET Core app. But what about .NET Framework 4.6 and above? Or a .NET Core app that was started without EF Core? There are multiple guides out there, scattered across multiple places, on getting EF Core installed and working. This post is intended to get you through a minimal EF Core installation and then walk through setup and unit testing scenarios.

As of this post, we’re using Entity Framework Core 2.0 within .NET Framework 4.7 projects. EF Core 2.0 targets .NET Standard 2.0, so it is compatible with .NET Framework 4.6.1 and above. Why use EF Core on .NET Framework projects? Simply put, compatibility. Certain services in Azure (Azure Functions, for example) currently support .NET Framework projects and not .NET Core. The EF team has done a great job making EF Core work across .NET versions, which gets around that problem. While this post adopts EF Core 2.0 within .NET Framework 4.7, the goal is to show the features built into EF Core 2.0. In particular, I really love the ability to unit test database code through the in-memory provider. We’ll talk about this later.

If this is your first time working with Entity Framework, I strongly suggest going through the “Get Started Guide” from Microsoft first: https://docs.microsoft.com/en-us/ef/core/get-started/

Let’s get started: Create a .NET Framework 4.7 Project

In Visual Studio, create a new project:

Install the following NuGet packages for EF Core:

Microsoft.EntityFrameworkCore – Core EF libraries.

Microsoft.EntityFrameworkCore.Design – Shared design-time components used by the EF Core tools.

Microsoft.EntityFrameworkCore.SqlServer – EF Core database provider for SQL Server, which is the provider we’ll be using in this post.

Microsoft.EntityFrameworkCore.Relational – Shared EF Core components for relational database providers. Concepts that are common to most databases, such as expressing queries in LINQ, transactions, and tracking changes to objects once they are loaded from the database, are included in the primary EF Core components. NOTE: Installing Microsoft.EntityFrameworkCore.SqlServer automatically installs the Relational assemblies.

Microsoft.EntityFrameworkCore.Tools – EF Core tools for the Package Manager Console (Add-Migration, Update-Database, and the commands that create a model from an existing database).

Microsoft.EntityFrameworkCore.SqlServer.Design – EF Core design-time tools for SQL Server. In EF Core 2.0 the provider design-time components were merged into the provider package, so this package should no longer be required.

Microsoft.EntityFrameworkCore.InMemory – In-memory database provider for EF Core (to be used for testing purposes). This is or will be your best friend! It is one of the reasons why I switched to EF Core (besides its other cool features). You only need to install this package if you’re doing unit testing (which you should!).

Edit the Project Files:

Edit the project file and make sure the following entry appears in the first property group:

    <PropertyGroup>
      <AutoGenerateBindingRedirects>true</AutoGenerateBindingRedirects>
    </PropertyGroup>

For test projects, make sure the following entry is present as well:

    <GenerateBindingRedirectsOutputType>true</GenerateBindingRedirectsOutputType>

Implementing EF Core

I’m not going to go through the basics of EF Core. I’ll skip the overview and dive straight into implementing EF entities and using the toolset.

The Model and DBContext:

We’ll be using a simple model and context class here.

    using System.ComponentModel.DataAnnotations;
    using System.Configuration; // requires a reference to the System.Configuration assembly
    using Microsoft.EntityFrameworkCore;

    public class Employee
    {
        [Key]
        public int EmployeeId { get; set; }
        public string FirstName { get; set; }
        public string LastName { get; set; }

        // Read-only computed property; it has no setter, so EF Core does not map it to a column by convention.
        public string DisplayName => FirstName + " " + LastName;

        public EmployeeType EmployeeType { get; set; }
    }

    public class EmployeeType
    {
        [Key]
        public int EmployeeTypeId { get; set; }
        public string EmployeeTypeRole { get; set; }
    }

    public class DbContextEfCore : DbContext
    {
        public DbContextEfCore(DbContextOptions<DbContextEfCore> options) : base(options) { }

        public virtual DbSet<Employee> Employees { get; set; }
        public virtual DbSet<EmployeeType> EmployeeTypes { get; set; }

        protected override void OnConfiguring(DbContextOptionsBuilder optionsBuilder)
        {
            // If the options passed to the constructor did not configure a provider, fall back to SQL Server.
            if (!optionsBuilder.IsConfigured)
            {
                var connectionString = ConfigurationManager.ConnectionStrings["SqlConnectionString"].ConnectionString;
                optionsBuilder.UseSqlServer(connectionString);
            }
        }
    }

EF Tools

Database Migrations – This is by far one of the best database upgrade tools that you can use for SQL Server. Since we have our model and DbContext, let’s create the migration scripts that create the database on any target server and update it when necessary.

Before you run any Database Migration commands, you’ll need to ensure that you have a class file that implements IDesignTimeDbContextFactory. The EF Core tools discover this class and use it to create the context for command-line tooling such as code generation and database migrations. Before proceeding, make sure your app.config or web.config file has the connection string set for your SQL Server (see the example below).

    <connectionStrings>
      <add name="SqlConnectionString" connectionString="Server=XXXX;Database=XXX;User ID=XXX;Password=XXX;Trusted_Connection=False;Encrypt=True;Connection Timeout=30;" providerName="System.Data.SqlClient"/>
    </connectionStrings>

NOTE: Unfortunately, you cannot use the InMemory database with the database migration tools, because the InMemory provider is not a relational provider.

Here’s an example class file that you can use in your project:

    using System.Configuration;
    using Microsoft.EntityFrameworkCore;
    using Microsoft.EntityFrameworkCore.Design;

    public class DesignTimeDbContextFactory : IDesignTimeDbContextFactory<DbContextEfCore>
    {
        public DbContextEfCore CreateDbContext(string[] args)
        {
            var builder = new DbContextOptionsBuilder<DbContextEfCore>();

            // Database Migrations must use a relational provider. Microsoft.EntityFrameworkCore.InMemory
            // is not a relational provider and therefore cannot be used with Migrations.
            //builder.UseInMemoryDatabase("EmployeeDB");

            // You can point this at SQL Express on your local machine.
            var connectionString = ConfigurationManager.ConnectionStrings["SqlConnectionString"].ConnectionString;
            builder.UseSqlServer(connectionString);

            return new DbContextEfCore(builder.Options);
        }
    }

When running the commands, make sure the project selected in the Package Manager Console is the .NET project that contains your Entity Framework code. At the same time, ensure that the startup project in Solution Explorer is set to the EF project.

In Visual Studio, go to Tools > NuGet Package Manager > Package Manager Console.

In the PMC (Package Manager Console), type: Add-Migration InitialCreate

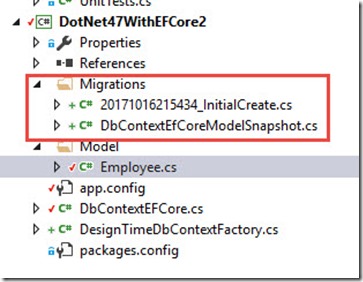

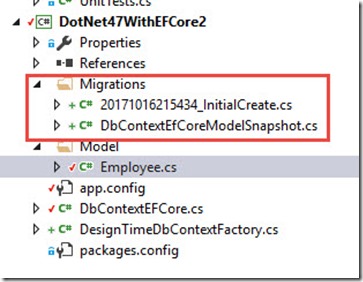

After running this command, notice that it creates the class files needed to build the initial database schema.

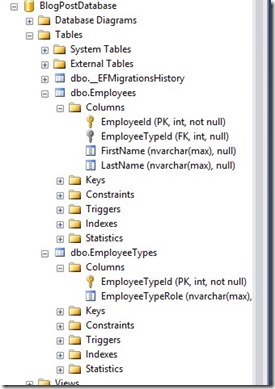

Let’s create our database on the target server. For this, run: Update-Database. When done, you should see the following:

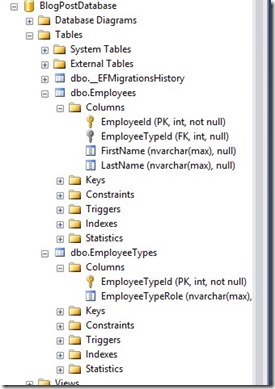

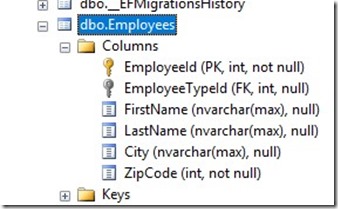

Your database has been created as well:

As we all know, requirements change more often than they used to (especially in agile environments). Suppose a request was made to add the city and zip code to the employee data. This is as easy as:

- Modifying the entity (model)

- Running “Add-Migration <ChangeSet>”

- Running “Update-Database”

The Entity Change:

    public class Employee
    {
        [Key]
        public int EmployeeId { get; set; }
        public string FirstName { get; set; }
        public string LastName { get; set; }
        public string DisplayName => FirstName + " " + LastName;
        public string City { get; set; }
        public int ZipCode { get; set; }
        public EmployeeType EmployeeType { get; set; }
    }

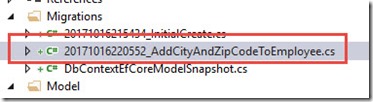

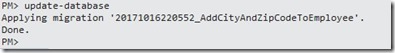

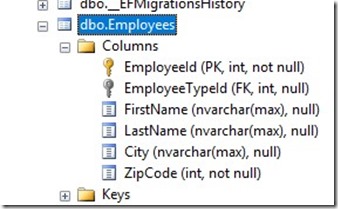

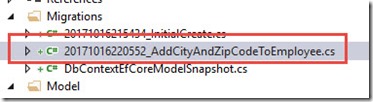

In the PMC (Package Manager Console) type: Add-Migration AddCityAndZipCodeToEmployee

New file has been created:

Schema has been added as well:

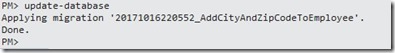
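For reference, here is a sketch of roughly what the generated migration class for this change looks like. It is written from the model above rather than copied from the tool output, so treat the details (column nullability, the default value for ZipCode) as assumptions. The real file is also accompanied by a designer file that carries the [Migration] and [DbContext] attributes.

```csharp
using Microsoft.EntityFrameworkCore.Migrations;

// Sketch of the migration that Add-Migration AddCityAndZipCodeToEmployee would produce.
public partial class AddCityAndZipCodeToEmployee : Migration
{
    // Applied by Update-Database: adds the two new columns.
    protected override void Up(MigrationBuilder migrationBuilder)
    {
        // string is a reference type, so the column is nullable by convention.
        migrationBuilder.AddColumn<string>(
            name: "City",
            table: "Employees",
            nullable: true);

        // int is a value type, so the column is required; existing rows get the default.
        migrationBuilder.AddColumn<int>(
            name: "ZipCode",
            table: "Employees",
            nullable: false,
            defaultValue: 0);
    }

    // Used when rolling back to an earlier migration: removes the columns again.
    protected override void Down(MigrationBuilder migrationBuilder)
    {
        migrationBuilder.DropColumn(name: "City", table: "Employees");
        migrationBuilder.DropColumn(name: "ZipCode", table: "Employees");
    }
}
```

It is worth reading the Up and Down methods before running Update-Database, since this is the code that actually changes your schema.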

In the PMC (Package Manager Console) type: Update-Database

Changes have been applied to the database directly.

I’ll leave database migrations here. You can get more information on all the nice and nifty features of database migrations here: https://msdn.microsoft.com/en-us/library/jj554735(v=vs.113).aspx

For all command line options for database migrations: https://docs.microsoft.com/en-us/ef/core/miscellaneous/cli/powershell

Finally, Database Migrations uses the __EFMigrationsHistory table to store which migrations have been applied to the database.
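You can also ask EF Core from code which migrations it considers applied or pending, which is handy when a deployment does not behave as expected. The following is a minimal sketch, assuming a context that is configured with a relational provider such as SQL Server; MigrationStatus is a hypothetical helper name, not something from EF Core.

```csharp
using System;
using Microsoft.EntityFrameworkCore;

// Hypothetical helper that prints the migration state of a relational database.
public static class MigrationStatus
{
    public static void Print(DbContext context)
    {
        // Migrations recorded in the history table of the target database.
        foreach (var id in context.Database.GetAppliedMigrations())
        {
            Console.WriteLine("applied: " + id);
        }

        // Migrations defined in the assembly that have not been applied yet.
        foreach (var id in context.Database.GetPendingMigrations())
        {
            Console.WriteLine("pending: " + id);
        }
    }
}
```

For example, you could call MigrationStatus.Print(new DesignTimeDbContextFactory().CreateDbContext(new string[0])) from a small console app. These extension methods are relational-only, so they will not work against the InMemory provider.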

Testing EF Core

This is my favorite topic! I can’t stress enough how easy it is to unit test database code using EF Core. The InMemory database provider that ships with EF Core sets up the runtime and everything else you need. Before EF Core, it really took some time to set up mock objects, fakes, dependencies, and so on. With the EF Core InMemory database, I was able to focus more on the design of the database rather than on the test harness. The short story is this: the InMemory database is another EF Core provider, one that stores data in memory at runtime. That means you work with the same DbContext, LINQ queries, and change tracking as you would against SQL Server (or another provider), without connecting to an actual provider endpoint. Keep in mind that it is not a relational database, so relational behavior such as referential integrity constraints is not enforced.

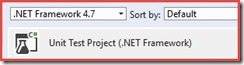

Start off by adding a new .NET Framework 4.7 test project.

Add a reference to the previously created project with EF Core enabled.

Add the following NuGet packages:

- Microsoft.EntityFrameworkCore

- Microsoft.EntityFrameworkCore.InMemory

- Microsoft.EntityFrameworkCore.SqlServer

Edit the project file and make sure the following entry appears in the initial property group.

    <PropertyGroup>
      <AutoGenerateBindingRedirects>true</AutoGenerateBindingRedirects>
      <GenerateBindingRedirectsOutputType>true</GenerateBindingRedirectsOutputType>
    </PropertyGroup>

Test Class:

    using System.Linq;
    using Microsoft.EntityFrameworkCore;
    using Microsoft.VisualStudio.TestTools.UnitTesting;

    [TestClass]
    public class DbContextTests
    {
        protected DbContextOptions<DbContextEfCore> Dboptionscontext;

        [TestInitialize]
        public void TestInitialize()
        {
            // This is where you select the InMemory provider for unit tests.
            // Unlike before, where you needed mocking libraries such as Moq or Rhino Mocks, the InMemory provider is enough.
            Dboptionscontext = new DbContextOptionsBuilder<DbContextEfCore>().UseInMemoryDatabase("EmployeeDatabaseInMemory").Options;

            // To switch to SQL Server, use these lines instead (requires using System.Configuration).
            //var connectionstring = ConfigurationManager.ConnectionStrings["SqlConnectionString"].ConnectionString;
            //Dboptionscontext = new DbContextOptionsBuilder<DbContextEfCore>().UseSqlServer(connectionstring).Options;
        }

        [TestMethod]
        public void ValidateInsertNewEmployeeRecord()
        {
            // Employee entity setup
            var employee = new Employee
            {
                FirstName = "Don",
                LastName = "Tan",
                City = "Seattle",
                ZipCode = 98023,
                EmployeeType = new EmployeeType
                {
                    EmployeeTypeRole = "HR"
                }
            };

            using (var dbcontext = new DbContextEfCore(Dboptionscontext))
            {
                dbcontext.Employees.Add(employee);
                dbcontext.SaveChanges();

                Assert.IsTrue(dbcontext.Employees.Any());
            }
        }
    }

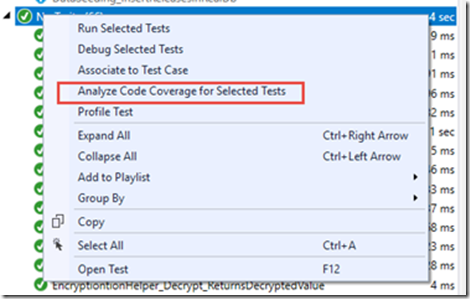

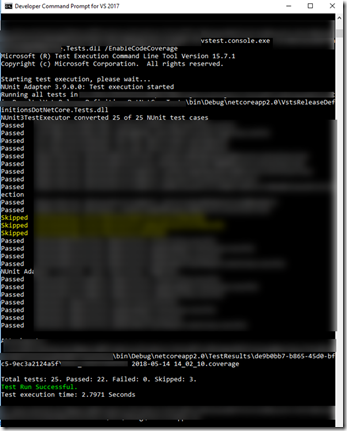

The TestInitialize method is where I set the DbContext options to use either the InMemory or the SQL Server provider. The test passes with either set of options.
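One refinement worth considering is giving each test its own in-memory database, so that rows saved by one test cannot leak into another. The sketch below assumes the Employee, EmployeeType and DbContextEfCore classes from this post; the test class and method names are made up for illustration. It also reads the data back through a second context, so the assertion checks what was stored rather than what the first context is still tracking.

```csharp
using System;
using System.Linq;
using Microsoft.EntityFrameworkCore;
using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]
public class EmployeeRoundTripTests
{
    private DbContextOptions<DbContextEfCore> _options;

    [TestInitialize]
    public void TestInitialize()
    {
        // A fresh database name per test keeps tests isolated from each other.
        _options = new DbContextOptionsBuilder<DbContextEfCore>()
            .UseInMemoryDatabase(Guid.NewGuid().ToString())
            .Options;
    }

    [TestMethod]
    public void SavedEmployeeCanBeReadBackWithItsType()
    {
        // Arrange and act: save an employee together with its related type.
        using (var context = new DbContextEfCore(_options))
        {
            context.Employees.Add(new Employee
            {
                FirstName = "Don",
                LastName = "Tan",
                City = "Seattle",
                ZipCode = 98023,
                EmployeeType = new EmployeeType { EmployeeTypeRole = "HR" }
            });
            context.SaveChanges();
        }

        // Assert: a second context on the same named database sees the stored rows.
        using (var context = new DbContextEfCore(_options))
        {
            var employee = context.Employees
                .Include(e => e.EmployeeType) // load the related EmployeeType as well
                .Single();

            Assert.AreEqual("Don Tan", employee.DisplayName);
            Assert.AreEqual("HR", employee.EmployeeType.EmployeeTypeRole);
        }
    }
}
```

The trade-off is that a random database name only makes sense for the InMemory provider; against SQL Server you would need a different cleanup strategy.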

Switching to the SQL Server EF Core provider stores the data in the database directly:

Using the InMemory provider for EF Core lets you focus on unit testing your database code more efficiently. This is definitely useful when working with multiple tables where each table has multiple relationships (keys and other constraints). More importantly, it lets you focus on designing the appropriate data access strategies, such as the repository or unit of work patterns.
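To make the repository idea a bit more concrete, here is a minimal sketch of a repository built on the context from this post. EmployeeRepository and its methods are hypothetical names chosen for illustration, and this is only one of many ways to shape such a class.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Hypothetical repository wrapping the DbContextEfCore class from this post.
public class EmployeeRepository
{
    private readonly DbContextEfCore _context;

    // The context is injected, so tests can pass one configured for InMemory.
    public EmployeeRepository(DbContextEfCore context)
    {
        _context = context;
    }

    // Adds the employee and saves immediately.
    public void Add(Employee employee)
    {
        _context.Employees.Add(employee);
        _context.SaveChanges();
    }

    // Returns all employees in the given city, with their EmployeeType loaded.
    public IReadOnlyList<Employee> FindByCity(string city)
    {
        return _context.Employees
            .Include(e => e.EmployeeType)
            .Where(e => e.City == city)
            .ToList();
    }
}
```

Because the repository only depends on the context it is given, a unit test can construct it with InMemory options while production code constructs it with SQL Server options, with no changes to the repository itself.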