Azure DevOps has released YAML builds. Truthfully, I’m very excited about this. YAML builds greatly change the landscape of DevOps practices on both the CI and CD fronts. At least as of the writing of this post, Microsoft fully supports YAML builds.

YAML Based Builds through Azure DevOps

Azure DevOps, particularly the build portion of the service, really encourages using YAML. You can tell by creating a new build definition: the first option under templates is YAML. However, if you’re like me and were used to the UI, or you’re just completely new to Azure DevOps, it could be a bit confusing. In a nutshell, here’s a very simple comparison showing why I encourage using YAML builds:

NON YAML Based Builds (UI Generated and Managed):

- JSON Based

- Not Focused on “Build as Code”

- No source control versioning

- Shared steps require more management in different projects

- Very UI driven; once a build is created, the challenge is validating the changes made to it (without proper versioning)

YAML Based Builds (Code Based Syntax):

- Modern way of managing builds that’s common in the open source community

- Focused on “Build as Code” since it’s part of the Application Git Branch

- Shared Steps and Templates across different repos. It’s easier to centralize common steps such as Quality, Security and other utility jobs.

- Version Control!!! If you have a big team, you don’t want to keep creating builds for your branch strategy. The build itself is also branched

- Keeps the developer in the same experience.

- A step towards “Documentation As Code”. Yes, we can use Comments!!!
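
To make the comparison concrete, here is a minimal sketch of a YAML build definition checked in next to the application code. The file name, branch name, agent image and project path are all assumptions for illustration; they are not taken from the pipeline built later in this post.

```yaml
# azure-pipelines.yml (hypothetical file at the repository root)
# Because this file is versioned with the code, every branch carries its own build definition.
trigger:
  branches:
    include:
    - master                   # assumed branch name

pool:
  vmImage: 'ubuntu-latest'     # assumed Microsoft-hosted agent image

steps:
# Comments like this one document the build right where it is defined.
- script: dotnet build ./MyApp/MyApp.csproj --configuration Release   # hypothetical project path
  displayName: 'Build the application'
```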

For more info on Azure DevOps YAML builds, see: Azure DevOps YAML Schema

For YAML specific information, see the following:

https://yaml.org/

Better Read: Relation to JSON:

https://yaml.org/spec/1.2/spec.html#id2759572

YAML’s indentation-based scoping makes it ideal for programmers (comments, references, etc.)

In this post, I’ll provide some sample build YAMLs for a CI pipeline from a developer’s perspective. Platforms used:

- Application Development Framework – .Net Core 2.2

- Hosting Environment – Docker Container

The web application is a simple Web API that listens for Azure DevOps service hook events. Associated unit tests validate changes to the API, so a typical process would consist of:

- Build the application

- Run quality checks against the application (Unit tests, Code Coverage thresholds, etc…)

- If successful, publish the appropriate artifacts to be used in the next phase (CD – Continuous Deployment)

Given the above context, we’ll be:

- Building the .Net Core Web API (DotNetCoreBuildAndPublish.yml)

- Running Quality Checks against the Web Api (DotNetCoreQualitySteps.yml)

- Creating a Docker Container for the Web Api (DockerBuildAndPublish.yml)

Job 1: Building the .Net Core Web API

parameters:
  Name: ''
  BuildConfiguration: ''
  ProjectFile: ''

steps:
# Restore NuGet packages for the project
- task: DotNetCoreCLI@2
  displayName: 'Restore DotNet Core Project'
  inputs:
    command: restore
    projects: ${{ parameters.ProjectFile }}

# Build the project with the requested configuration
- task: DotNetCoreCLI@2
  displayName: 'Build DotNet Core Project'
  inputs:
    command: build # stated explicitly; build is the task's default command
    projects: ${{ parameters.ProjectFile }}
    arguments: '--configuration ${{ parameters.BuildConfiguration }}'

# Publish the build output into the artifact staging directory as a zip
- task: DotNetCoreCLI@2
  displayName: 'Publish DotNet Core Artifacts'
  inputs:
    command: publish
    publishWebProjects: false
    projects: ${{ parameters.ProjectFile }}
    arguments: '--configuration ${{ parameters.BuildConfiguration }} --output $(build.artifactstagingdirectory)'
    zipAfterPublish: True

# Upload the staged output as a build artifact named <Name>_Package
- task: PublishBuildArtifacts@1
  displayName: 'Publish Artifact'
  inputs:
    PathtoPublish: '$(build.artifactstagingdirectory)'
    ArtifactName: ${{ parameters.Name }}_Package
  condition: succeededOrFailed()

This YAML is straightforward: it uses Azure DevOps tasks to call the .Net Core CLI with commands such as restore and publish. This is the most basic YAML for a .Net Core app. Also, notice the parameters section: these are the parameters that must be passed in by the calling pipeline (the upstream pipeline).
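
As a rough sketch of how a calling pipeline supplies those parameters, assume the template sits in the same repository under a hypothetical `templates/` folder. The folder, project path and values below are placeholders, not the ones used later in this post.

```yaml
# Calling pipeline (sketch). Paths and values are placeholders.
steps:
- template: templates/DotNetCoreBuildAndPublish.yml   # hypothetical relative path
  parameters:
    Name: 'MyWebApi'                            # becomes the artifact name MyWebApi_Package
    BuildConfiguration: 'Release'
    ProjectFile: './MyWebApi/MyWebApi.csproj'   # hypothetical project file
```

The template itself never hard-codes a project, which is what makes it reusable across repos.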

CHEAT!!! So, if you’re also new to YAML builds, Microsoft has made it easier to transition from JSON to YAML. Navigate to an existing build definition, click on the job level node (not steps), then click on “View As YAML”. This literally takes all your build steps and translates them into YAML format. Moving forward, use this feature and set parameters for your shared YAML steps.

Job 2: Run Quality Checks against the Web Api

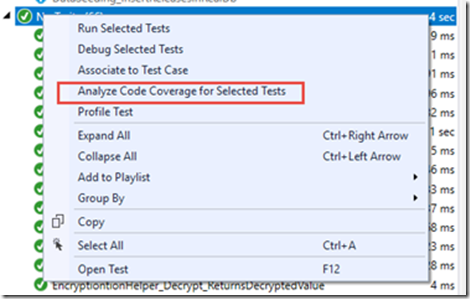

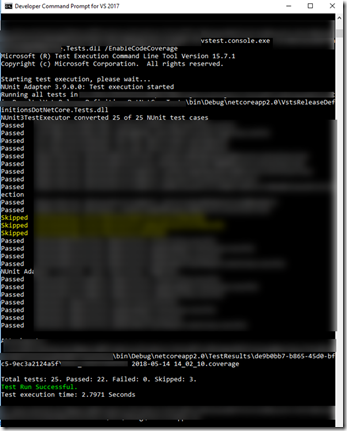

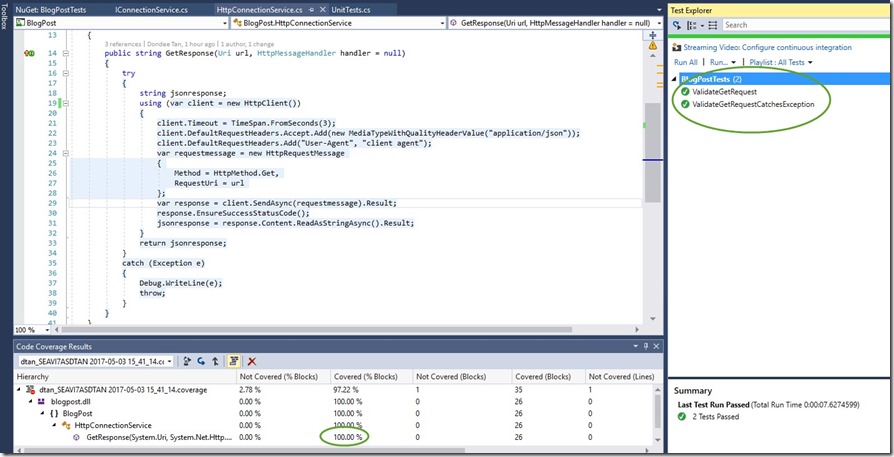

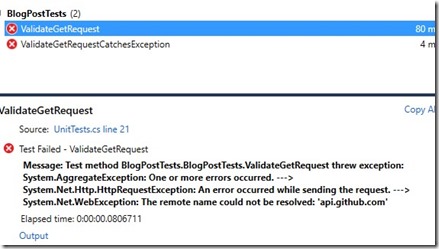

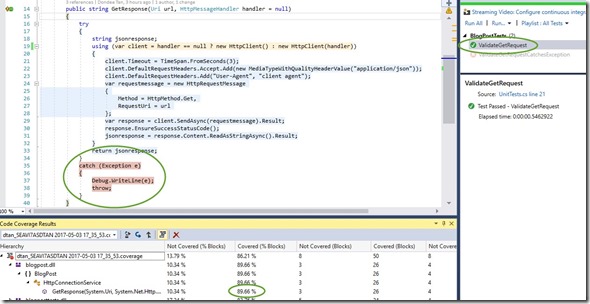

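This job essentially executes any quality checks for the application, in this case both unit tests and code coverage thresholds. Again, it calls existing pre-built tasks available in Azure DevOps (the Build Quality Checks task comes from a Marketplace extension, so it has to be installed in your organization first).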

parameters:
  Name: ''
  BuildConfiguration: ''
  TestProjectFile: ''
  CoverageThreshold: ''

steps:
# Restore NuGet packages for the test project
- task: DotNetCoreCLI@2
  displayName: 'Restore DotNet Test Project Files'
  inputs:
    command: restore
    projects: ${{ parameters.TestProjectFile }}

# Run the unit tests and collect code coverage
- task: DotNetCoreCLI@2
  displayName: 'Test DotNet Core Project'
  inputs:
    command: test
    projects: ${{ parameters.TestProjectFile }}
    arguments: '--configuration ${{ parameters.BuildConfiguration }} --collect "Code coverage"'

# Fail the build if coverage falls below the fixed threshold
- task: mspremier.BuildQualityChecks.QualityChecks-task.BuildQualityChecks@5
  displayName: 'Check Code Coverage'
  inputs:
    checkCoverage: true
    coverageFailOption: fixed
    coverageThreshold: ${{ parameters.CoverageThreshold }}

Job 3: Create a Docker Container for the Web Api

parameters:
  Name: ''
  dockerimagename: ''
  dockeridacr: '' #ACR Admin User
  dockerpasswordacr: '' #ACR Admin Password
  dockeracr: ''
  dockerapppath: ''
  dockerfile: ''

steps:
- powershell: |
    # Get Build Date Variable if need be
    $date=$(Get-Date -Format "yyyyMMdd");
    Write-Host "##vso[task.setvariable variable=builddate]$date"
    # Set branchname to lower case because of docker repo standards or it will error out
    $branchname= $env:sourcebranchname.ToLower();
    Write-Host "##vso[task.setvariable variable=sourcebranch]$branchname"
    # Set docker tag from build definition name: $(Date:yyyyMMdd)$(Rev:.r)
    $buildnamesplit = $env:buildname.Split("_")
    $dateandrevid = $buildnamesplit[2]
    Write-Host "##vso[task.setvariable variable=DockerTag]$dateandrevid"
  displayName: 'Powershell Set Environment Variables for Docker Tag and Branch Repo Name'
  env:
    sourcebranchname: '$(Build.SourceBranchName)' # Used to specify Docker Image Repo
    buildname: '$(Build.BuildNumber)' # The name of the completed build which is defined above the upstream YAML file (main yaml file calling templates)

- script: |
    docker build -f ${{ parameters.dockerfile }} -t ${{ parameters.dockeracr }}.azurecr.io/${{ parameters.dockerimagename }}$(sourcebranch):$(DockerTag) ${{ parameters.dockerapppath }}
    docker login -u ${{ parameters.dockeridacr }} -p ${{ parameters.dockerpasswordacr }} ${{ parameters.dockeracr }}.azurecr.io
    docker push ${{ parameters.dockeracr }}.azurecr.io/${{ parameters.dockerimagename }}$(sourcebranch):$(DockerTag)
  displayName: 'Builds Docker App - Login - Then Pushes to ACR'

This is the last job for our demo. Once the quality checks pass, we build a Docker image and upload it to a container registry. In this case, I’m using an Azure Container Registry.

This is an interesting YAML. I’ve intentionally not used pre-built tasks from Azure DevOps, to illustrate YAML capabilities using external command sets such as PowerShell (which works across platforms) and inline script commands such as docker.

First things first: Docker is strict about naming when creating images and tags. One of its conventions is that repository (image) names must be all lowercase. Tags may contain uppercase letters, but keeping everything lowercase avoids surprises. Let’s dissect these steps:

Powershell Step: I’ve added some logic here to get built-in variables from Azure DevOps build definitions. Notice that I’ve bound sourcebranchname and buildname as environment variables to the Azure DevOps built-in variables:


'$(Build.SourceBranchName)' # Used to specify Docker Image Repo

'$(Build.BuildNumber)' # The name of the completed build which is defined above the upstream YAML file (main yaml file calling templates)

What’s next is straightforward for you “DevOps practitioners” 🙂

$branchname= $env:sourcebranchname.ToLower();

The above line is the step where I use PowerShell to set the branch name to all lowercase. I will use it later when calling docker commands to create and publish Docker images.
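
If the job runs on a Linux agent, the same lowercasing could also be done in a bash step. This is only a sketch under that assumption, not what the template above does. `BUILD_SOURCEBRANCHNAME` is the environment variable Azure DevOps exposes for `Build.SourceBranchName`.

```yaml
steps:
- bash: |
    # ${VAR,,} is the bash 4+ lowercase expansion (assumes a Linux agent with a recent bash)
    branch="${BUILD_SOURCEBRANCHNAME,,}"
    # Publish the lowercased name as a pipeline variable for later steps
    echo "##vso[task.setvariable variable=sourcebranch]$branch"
  displayName: 'Lowercase the branch name for the Docker repository'
```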

$buildnamesplit = $env:buildname.Split("_")

$dateandrevid = $buildnamesplit[2]

The above lines depend on how you define your build name (the name: value in the main pipeline YAML). I used the last part of the build name as the Docker tag.

name: $(Build.DefinitionName)_$(Build.SourceBranchName)_$(Date:yyyyMMdd)$(Rev:.r)

e.g.: #Webhooks-BuildEvents-YAML_FeatureB_20190417.4

Webhooks-BuildEvents-YAML – BuildName

FeatureB – BranchName

20190417.4 – Date/Rev (Used as the Docker Tag)

You will see this build name defined in our upstream pipeline, meaning the main build YAML file that calls all these templates.
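
One thing to keep in mind: indexing with `[2]` assumes that neither the build definition name nor the branch name contains an underscore. A slightly more forgiving sketch takes the last segment instead. It still assumes the build name always ends with the date and revision part.

```yaml
steps:
- powershell: |
    # Split the build name on "_" and take the last segment rather than a fixed index.
    # Assumption: the build name always ends with the date and revision segment.
    $parts = $env:buildname.Split("_")
    $dateandrevid = $parts[-1]
    Write-Host "##vso[task.setvariable variable=DockerTag]$dateandrevid"
  displayName: 'Set Docker tag from the last segment of the build name'
  env:
    buildname: '$(Build.BuildNumber)'
```

With the example name above, both approaches yield 20190417.4. They only differ once an extra underscore shows up earlier in the name.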

Script Step: Pretty straightforward as well. We invoke inline docker commands to: Build, Login and Push a docker image to a registry (ACR in this case). Notice this line though:

docker build -f ${{ parameters.dockerfile }} -t ${{ parameters.dockeracr }}.azurecr.io/${{ parameters.dockerimagename }}$(sourcebranch):$(DockerTag) ${{ parameters.dockerapppath }}

I’m setting the image name with a combination of both the passed parameter and sourcebranch. This guarantees that each source branch you work on gets its own image repository.
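
A caveat on the login line: passing the password with `-p` puts it on the command line. A possible alternative, sketched here for the same template and assuming a Linux agent (as in the DockerBuild job), is to map the password into an environment variable and pipe it to `docker login --password-stdin`. The name `ACR_PASSWORD` is a placeholder of my own.

```yaml
steps:
- script: |
    # Read the password from stdin instead of passing it as a command-line argument
    echo "$ACR_PASSWORD" | docker login -u ${{ parameters.dockeridacr }} --password-stdin ${{ parameters.dockeracr }}.azurecr.io
  displayName: 'Login to ACR without the password on the command line'
  env:
    ACR_PASSWORD: ${{ parameters.dockerpasswordacr }}   # placeholder name; secret variables must be mapped explicitly
```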

The Complete YAML:

name: $(Build.DefinitionName)_$(Build.SourceBranchName)_$(Date:yyyyMMdd)$(Rev:.r)

trigger:
  # branch triggers. Commenting out to trigger builds on all branches
  branches:
    include:
    - master
    - develop
    - feature*
  paths:
    include:
    - AzureDevOpsBuildEvents/*
    - AzureDevOpsBuildEvents.Tests/*
    - azure-pipelines-buildevents.yml

variables:
- group: DockerInfo

resources:
  repositories:
  - repository: templates # identifier (A-Z, a-z, 0-9, and underscore)
    type: git # see below git - azure devops
    name: SoftwareTransformation/DevOps # Teamproject/repositoryname (format depends on `type`)
    ref: refs/heads/master # ref name to use, defaults to 'refs/heads/master'

jobs:
- job: AppBuild
  pool:
    name: 'Hosted VS2017' # Valid Values: 'OnPremAgents' - Hosted:'Hosted VS2017', 'Hosted macOS', 'Hosted Ubuntu 1604'
  steps:
  - template: YAML/Builds/DotNetCoreBuildAndPublish.yml@templates # Template reference
    parameters:
      Name: 'WebHooksBuildEventsWindowsBuild' # 'Ubuntu 16.04' NOTE: Code Coverage doesn't work on Linux Hosted Agents. Bummer.
      BuildConfiguration: 'Debug'
      ProjectFile: './AzureDevOpsBuildEvents/AzureDevOpsBuildEvents.csproj'

- job: QualityCheck
  pool:
    name: 'Hosted VS2017' # Valid Values: 'OnPremAgents' - Hosted:'Hosted VS2017', 'Hosted macOS', 'Hosted Ubuntu 1604'
  steps:
  - template: YAML/Builds/DotNetCoreQualitySteps.yml@templates # Template reference
    parameters:
      Name: 'WebHooksQualityChecks' # 'Ubuntu 16.04' NOTE: Code Coverage doesn't work on Linux Hosted Agents. Bummer.
      BuildConfiguration: 'Debug'
      TestProjectFile: './AzureDevOpsBuildEvents.Tests/AzureDevOpsBuildEvents.Tests.csproj'
      CoverageThreshold: '10'

- job: DockerBuild
  pool:
    vmImage: 'Ubuntu 16.04' # other options: 'macOS-10.13', 'vs2017-win2016'. 'Ubuntu 16.04'
  dependsOn: QualityCheck
  condition: succeeded('QualityCheck')
  steps:
  - template: YAML/Builds/DockerBuildAndPublish.yml@templates # Template reference
    parameters:
      Name: "WebHooksBuildEventsLinux"
      dockerimagename: 'webhooksbuildeventslinux'
      dockeridacr: $(DockerAdmin) #ACR Admin User
      dockerpasswordacr: $(DockerACRPassword) #ACR Admin Password
      dockeracr: 'azuredevopssandbox'
      dockerapppath: './AzureDevOpsBuildEvents'
      dockerfile: './AzureDevOpsBuildEvents/DockerFile'

The above YAML is the entire build pipeline, comprising all the jobs that call each of the YAML templates. There are 2 sections that I do want to point out:

Resources: This is the part where I refer to the YAML templates stored in a different Git Repo instance within Azure DevOps

resources:
  repositories:
  - repository: templates # identifier (A-Z, a-z, 0-9, and underscore)
    type: git # see below git - azure devops
    name: SoftwareTransformation/DevOps # Teamproject/repositoryname (format depends on `type`)
    ref: refs/heads/master # ref name to use, defaults to 'refs/heads/master'
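
Since `ref` accepts any Git ref, a team that wants template changes to roll out deliberately could point it at a tag instead of a branch. A sketch, assuming a hypothetical tag `v1.0` exists in the templates repo:

```yaml
resources:
  repositories:
  - repository: templates
    type: git
    name: SoftwareTransformation/DevOps
    ref: refs/tags/v1.0   # hypothetical tag; pins the templates to a fixed version
```

Pipelines pinned this way keep building with the same template version until the ref is changed in the calling YAML.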

Variables: This is the section where I use an Azure DevOps pipeline variable group to keep the Docker login information stored as secrets. For more information on this, see: Variable groups

variables:

- group: DockerInfo
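
If you need ordinary variables next to the group, they have to use the name/value list form once a group is referenced. A small sketch, where `BuildConfiguration` is a hypothetical variable that is not part of the pipeline above:

```yaml
variables:
- group: DockerInfo          # variable group defined in the pipeline Library
- name: BuildConfiguration   # hypothetical inline variable alongside the group
  value: 'Debug'
```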

The end result: a working pipeline that triggers builds from code and works with your branching strategy of choice. This greatly speeds up the development process without the worry of maintaining manually created build definitions.